Synthesis 1 (Artificial Intelligence)

12 min read • June 18, 2024

AP English Language Free Response Synthesis for Artificial Intelligence

👋 Welcome to the AP English Lang FRQ: Synthesis 1 (Artificial Intelligence). These are LOOOOONG questions, so grab some paper and a pencil, or open up a blank page on your computer.

⚠️ (Unfortunately, we don't have an Answers Guide or Rubric for this question, but it can give you an idea of how a Synthesis FRQ might show up on the exam.)

⏱ The AP English Language exam has 3 free-response questions, and you will be given 2 hours and 15 minutes to complete the FRQ section, which includes a 15-minute reading period. (This means you should give yourself ~15 minutes to read the documents and ~40 minutes to draft your response.)

- 😩 Getting stumped halfway through answering? Look through all of the available resources on Synthesis.

- 🤝 Prefer to study with other students working on the same topic? Join a group in Hours.

Setup

In the 21st century, artificial intelligence (A.I.) technology has grown rapidly in both its societal reach and its capabilities. While many scientists and politicians warn of the dangers of unchecked artificial intelligence, others believe that A.I. should not be regulated by the government.

Guidelines

Carefully read the following six sources, including the introductory information for each source. Write an essay that synthesizes material from at least three of the sources and develops your position on the extent to which artificial intelligence should be regulated.

- Document 1 (Bossmann)

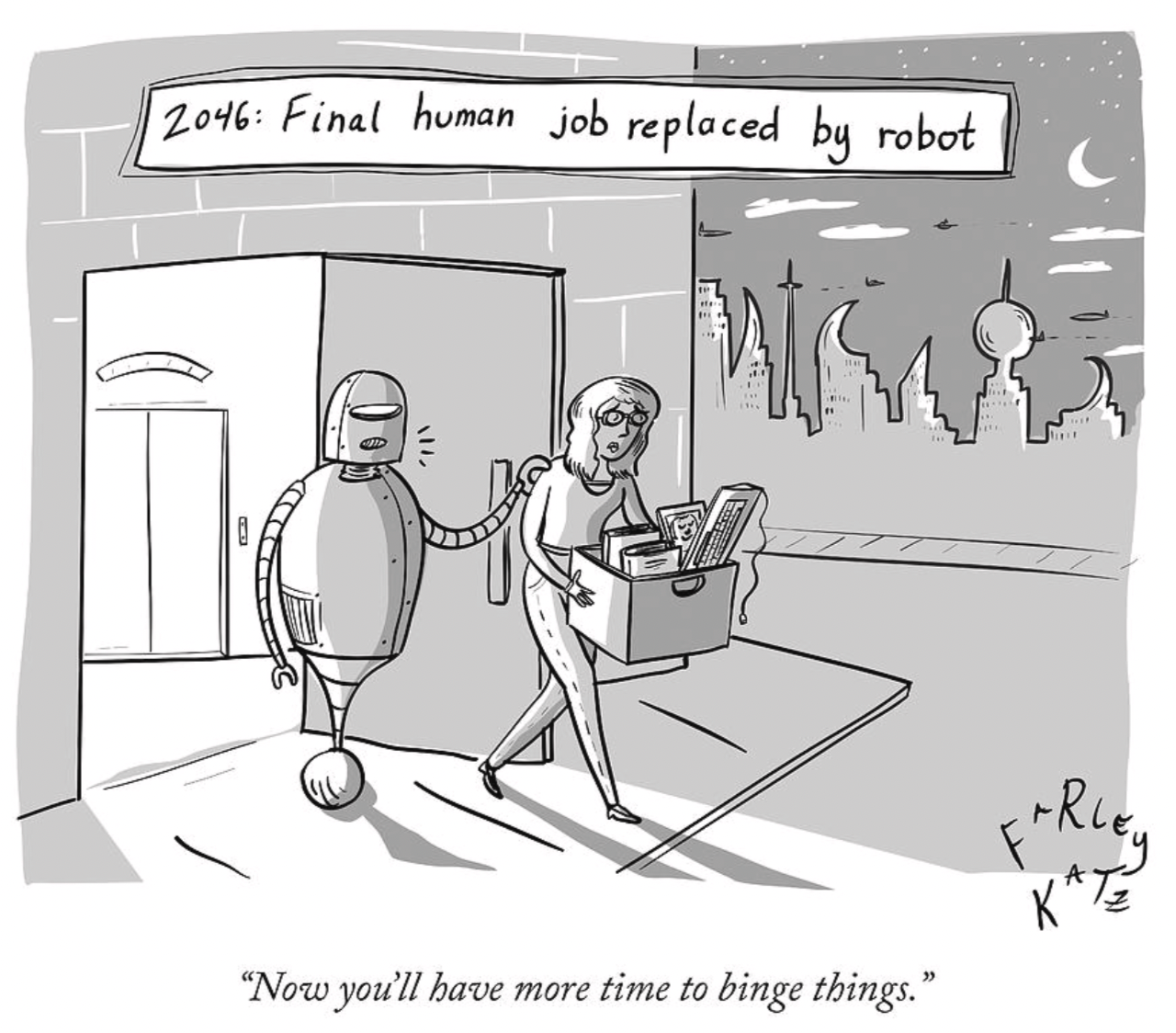

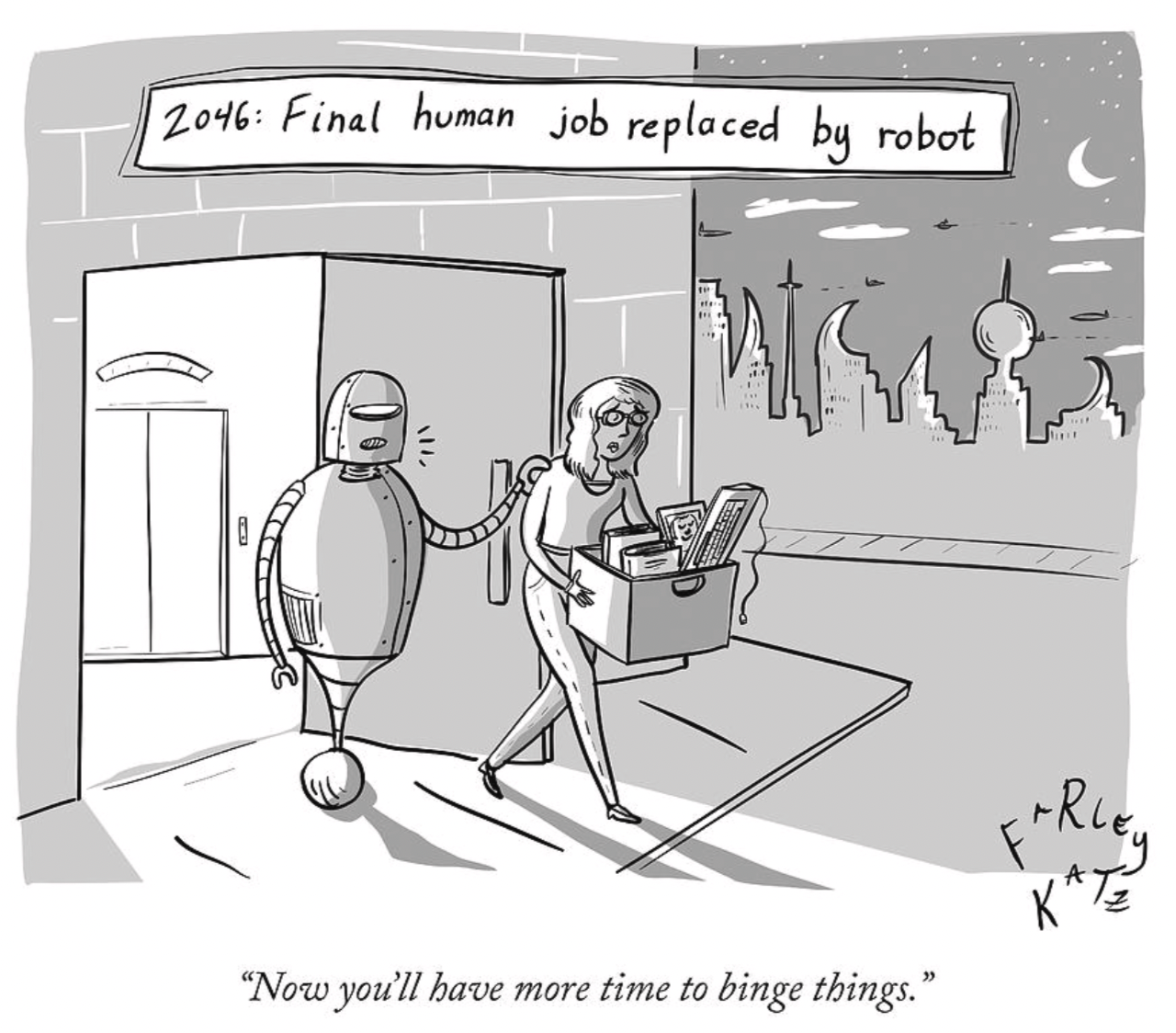

- Document 2 (Cartoon)

- Document 3 (Russell)

- Document 4 (Yang)

- Document 5 (Shead)

- Document 6 (Straub)

Document 1 (Bossmann)

Source*: Bossmann, Julia. “Top 9 ethical issues in artificial intelligence.” weforum.org. World Economic Forum, 21 October 2016. Web. 1 March 2021.*

(The following is excerpted from an online article.)

Tech giants such as Alphabet, Amazon, Facebook, IBM and Microsoft – as well as individuals like Stephen Hawking and Elon Musk – believe that now is the right time to talk about the nearly boundless landscape of artificial intelligence. In many ways, this is just as much a new frontier for ethics and risk assessment as it is for emerging technology. So which issues and conversations keep AI experts up at night?

1. Unemployment. What happens after the end of jobs?

- The hierarchy of labour is concerned primarily with automation. As we’ve invented ways to automate jobs, we could create room for people to assume more complex roles, moving from the physical work that dominated the pre-industrial globe to the cognitive labour that characterizes strategic and administrative work in our globalized society…

2. Inequality. How do we distribute the wealth created by machines?

- Our economic system is based on compensation for contribution to the economy, often assessed using an hourly wage. The majority of companies are still dependent on hourly work when it comes to products and services. But by using artificial intelligence, a company can drastically cut down on relying on the human workforce, and this means that revenues will go to fewer people. Consequently, individuals who have ownership in AI-driven companies will make all the money...

3. Humanity. How do machines affect our behaviour and interaction?

- ...Even though not many of us are aware of this, we are already witnesses to how machines can trigger the reward centres in the human brain. Just look at click-bait headlines and video games. These headlines are often optimized with A/B testing, a rudimentary form of algorithmic optimization for content to capture our attention. This and other methods are used to make numerous video and mobile games become addictive. Tech addiction is the new frontier of human dependency...

4. Artificial stupidity. How can we guard against mistakes?

- Intelligence comes from learning, whether you’re human or machine. Systems usually have a training phase in which they "learn" to detect the right patterns and act according to their input. Once a system is fully trained, it can then go into test phase, where it is hit with more examples and we see how it performs.

- Obviously, the training phase cannot cover all possible examples that a system may deal with in the real world. These systems can be fooled in ways that humans wouldn't be. For example, random dot patterns can lead a machine to “see” things that aren’t there. If we rely on AI to bring us into a new world of labour, security and efficiency, we need to ensure that the machine performs as planned, and that people can’t overpower it to use it for their own ends.

5. Racist robots. How do we eliminate AI bias?

- Though artificial intelligence is capable of a speed and capacity of processing that’s far beyond that of humans, it cannot always be trusted to be fair and neutral. Google and its parent company Alphabet are one of the leaders when it comes to artificial intelligence, as seen in Google’s Photos service, where AI is used to identify people, objects and scenes. But it can go wrong, such as when a camera missed the mark on racial sensitivity, or when a software used to predict future criminals showed bias against black people.

- We shouldn’t forget that AI systems are created by humans, who can be biased and judgmental. Once again, if used right, or if used by those who strive for social progress, artificial intelligence can become a catalyst for positive change.

6. Security. How do we keep AI safe from adversaries?

- The more powerful a technology becomes, the more can it be used for nefarious reasons as well as good. This applies not only to robots produced to replace human soldiers, or autonomous weapons, but to AI systems that can cause damage if used maliciously. Because these fights won't be fought on the battleground only, cybersecurity will become even more important. After all, we’re dealing with a system that is faster and more capable than us by orders of magnitude.

7. Evil genies. How do we protect against unintended consequences?

- It’s not just adversaries we have to worry about. What if artificial intelligence itself turned against us? This doesn't mean by turning "evil" in the way a human might, or the way AI disasters are depicted in Hollywood movies. Rather, we can imagine an advanced AI system as a "genie in a bottle" that can fulfill wishes, but with terrible unforeseen consequences…

8. Singularity. How do we stay in control of a complex intelligent system?

- ...Human dominance is almost entirely due to our ingenuity and intelligence...

- This poses a serious question about artificial intelligence: will it, one day, have the same advantage over us? We can't rely on just "pulling the plug" either, because a sufficiently advanced machine may anticipate this move and defend itself. This is what some call the “singularity”: the point in time when human beings are no longer the most intelligent beings on earth.

9. Robot rights. How do we define the humane treatment of AI?

- ...Once we consider machines as entities that can perceive, feel and act, it's not a huge leap to ponder their legal status. Should they be treated like animals of comparable intelligence? Will we consider the suffering of "feeling" machines?...

Document 2 (Katz, Cartoon)

Source*: Katz, Farley. “Final human job replaced by robot.” The New Yorker. 29 October 2018.*

Document 3 (Russell)

Source*: Russell, Cameron. “A Case for not Regulating the Development of Artificial Intelligence.” towardsdatascience.com. Towards Data Science, 1 April 2019. Web. 1 March 2021.*

(The following is from an online article.)

What comes to mind when you hear the term artificial intelligence? The Terminator? HAL from Space Odyssey? The robotic lieutenant AUTO in Wall-E? Or the death of Elain Herzberg, who was killed by Uber’s self-driving car in Arizona?

Regardless of what immediately pops into your mind, popular culture has portrayed artificial intelligence as the antagonist in many future apocalyptic films, instilling a sense of fear and a cloud of mystery over the true capabilities of artificial intelligence–in many ways, this is reminiscent of the public’s fear of sharks, which was forever changed by the production of Jaws. With all the uneasiness surrounding artificial intelligence, regulators are starting to pay attention, albeit slowly, and beginning to address the concerns of the people.

While artificial intelligence used in the public domain should have certain safeguards to protect the interests of humanity, the development of new algorithms in the private sector should remain unrestricted.

There are four primary reasons cited in the argument for a lack of regulation:

1. Science

- Should we regulate science? Artificial intelligence is a field of science similar to chemistry, physics, and nanotechnology. Never before have we limited human ingenuity; for millennia people have been constantly innovating, eager to develop new technological breakthroughs and have thus improved our overall quality of life. Historically, humans are incessant explorers, from Sir Edmund Hillary to Julius Oppenheimer, and putting limitations on technological progression will inevitably be ineffective...

2. Uneducated Regulators

- Oftentimes, politicians follow the voices of their constituents, as they hope to be re-elected and do not want to tarnish their reputation. However, when the public as a whole is relatively uneducated on the implications of artificial intelligence, policy decisions will be made based on the views of ill-informed citizens. Plus, with the negative portrayal of artificial intelligence in media, many people will be left in the dark regarding the positives: cancer detection, eliminating tedious/monotonous tasks, freeing up more leisure time, and reducing human errors in the workforce. There are an abundance of positives that are hard to immediately recognize; restricting the usage and development of artificial intelligence will minimize these positive effects.

3. A Lack of Creativity

- Until the inception of artificial general intelligence, when an algorithm can complete a human task without explicit instruction and can learn from its mistakes, little creativity comes from computers. Sure, previously unrecognized correlations can be found in data, but novels, songs, and artworks cannot be created with the same artistic mastery and creativity as humans. While some people fear robot humanoids will take over the planet in the future, a computer’s absence of creativity ensures they will not surpass current human capabilities. Simply put, computers are not a threat to human life.

4. Global Competition

- If the United States hinders the development of artificial intelligence through regulation, other countries will likely not follow suit, continuing to fund their research. And because future conflicts will be fought primarily over the computer grid, with unmanned vehicles collecting intel and executing missions, being prepared for this worst-case scenario is essential. For example, there are already speculations of the development of algorithms capable of distinguishing civilians from enemies on the battlefield. Whether the United States decides to utilize automated processes in warfare is up to the government’s discretion, but having such technological capability ensures there is a last resort in a situation of dire circumstances. It is better having the technology available than being left defenseless against more dominant opponents. And as the age-old saying goes, “hope for the best, prepare for the worst.”

Document 4 (Yang)

Source*: Yang, Andrew. “Department of Technology.” yang2020.com. Yang 2020, 2020. Web. 1 March 2021.*

(The following is from a political campaign website.)

Americans have seen the negative impact of technology on their lives. From automation that is displacing their jobs to smart phones that are causing unknown psychological issues for our children, Americans are worried about the future, and need to be able to trust the government to play a role in ensuring that we’re prosperous and innovative, but also safe. Technology can bring about new levels of prosperity, but it also holds the potential to disrupt our economies, ruin lives throughout several generations, and, if experts such as Stephen Hawking and Elon Musk are to be believed, destroy humanity.

Technology is advancing at a pace never before seen in human history, and even those developing it don’t fully understand how it works or what direction it’s taking. Recent advances in machine learning have shown that a computer, given certain directives, can learn tasks much faster than humans thought possible even a year ago.

The level of technological fluency that members of our government has shown has created justified fears in the minds of Americans that the government isn’t equipped to create a regulatory system that’s designed to protect them. We’re heading into this new world with a regulatory system that’s designed for technology that’s much less sophisticated than what we’re facing in the near future.

Technological innovation shouldn’t be stopped, but it should be monitored and analyzed to make sure we don’t move past a point of no return. This will require cooperation between the government and private industry to ensure that developing technologies can continue to improve our lives without destroying them. We need a federal government department, with a cabinet-level secretary, that is in charge of leading technological regulation in the 21st century.

Document 5 (Shead)

Source*: Shead, Sam. “Elon Musk says DeepMind is his ‘top concern’ when it comes to A.I.” cnbc.com. CNBC, 29 July 2020. Web. 1 March 2021.*

(The following is excerpted from an article from a popular news website.)

Elon Musk believes that London research lab DeepMind is a “top concern” when it comes to artificial intelligence.

DeepMind was acquired by Google in 2014 for a reported $600 million. The research lab, led by chief executive Demis Hassabis, is best-known for developing AI systems that can play games better than any human.

“Just the nature of the AI that they’re building is one that crushes all humans at all games,” Musk told The New York Times in an interview published on Saturday. “I mean, it’s basically the plotline in ‘War Games.’” ...

Musk has repeatedly warned that AI will soon become just as smart as humans and said that when it does we should all be scared because humanity’s very existence is at stake.

The tech billionaire, who profited from an early investment in DeepMind, told The New York Times that his experience of working with AI at Tesla means he is able to say with confidence “that we’re headed toward a situation where AI is vastly smarter than humans.” He said he believes the time frame is less than five years. “That doesn’t mean everything goes to hell in five years. It just means that things get unstable or weird,” he said.

Musk co-founded the OpenAI research lab in San Francisco in 2015, one year after Google acquired DeepMind. Set up with an initial $1 billion pledge that was later matched by Microsoft, OpenAI says its mission is to ensure AI benefits all of humanity. In February 2018, Musk left the OpenAI board but he continues to donate and advise the organization.

Musk has been sounding the alarm on AI for years and his views contrast with many AI researchers working in the field...

Document 6 (Straub)

Source*: Straub, Jeremy. “Elon Musk is wrong about regulating artificial intelligence.” marketwatch.com. Market Watch, 7 January 2018. Web. 1 March 2021.*

(The following is excerpted from an online article.)

Some people are afraid that heavily armed artificially intelligent robots might take over the world, enslaving humanity — or perhaps exterminating us.

These people, including tech-industry billionaire Elon Musk and eminent physicist Stephen Hawking, say artificial intelligence technology needs to be regulated to manage the risks. But Microsoft founder Bill Gates and Facebook’s Mark Zuckerberg disagree, saying the technology is not nearly advanced enough for those worries to be realistic.

As someone who researches how AI works in robotic decision-making, drones and self-driving vehicles, I’ve seen how beneficial it can be. I’ve developed AI software that lets robots working in teams make individual decisions, as part of collective efforts to explore and solve problems.

Researchers are already subject to existing rules, regulations and laws designed to protect public safety. Imposing further limitations risks reducing the potential for innovation with AI systems.

While the term “artificial intelligence” may conjure fantastical images of humanlike robots, most people have encountered AI before. It helps us find similar products while shopping, offers movie and TV recommendations and helps us search for websites. It grades student writing, provides personalized tutoring and even recognizes objects carried through airport scanners.

In each case, AI makes things easier for humans. For example, the AI software I developed could be used to plan and execute a search of a field for a plant or animal as part of a science experiment. But even as the AI frees people from doing this work, it is still basing its actions on human decisions and goals about where to search and what to look for.

In areas like these and many others, AI has the potential to do far more good than harm — if used properly. But I don’t believe additional regulations are currently needed. There are already laws on the books of nations, states and towns governing civil and criminal liabilities for harmful actions. Our drones, for example, must obey FAA regulations, while the self-driving car AI must obey regular traffic laws to operate on public roadways.

Existing laws also cover what happens if a robot injures or kills a person, even if the injury is accidental and the robot’s programmer or operator isn’t criminally responsible. While lawmakers and regulators may need to refine responsibility for AI systems’ actions as technology advances, creating regulations beyond those that already exist could prohibit or slow the development of capabilities that would be overwhelmingly beneficial.

<< Hide Menu

Synthesis 1 (Artificial Intelligence)

12 min read•june 18, 2024

AP English Language Free Response Synthesis for Artificial Intelligence

👋 Welcome to the AP English Lang FRQ: Synthesis 1 (Artificial Intelligence). These are LOOOOONG questions, so grab some paper and a pencil, or open up a blank page on your computer.

⚠️ (Unfortunately, we don't have an Answers Guide or Rubric for this question, but it can give you an idea of how a Synthesis FRQ might show up on the exam.)

⏱ The AP English Language exam has 3 free-response questions, and you will be given 2 hours and 15 minutes to complete the FRQ section, which includes a 15-minute reading period. (This means you should give yourself ~15 minutes to read the documents and ~40 minutes to draft your response.)

- 😩 Getting stumped halfway through answering? Look through all of the available resources on Synthesis.

- 🤝 Prefer to study with other students working on the same topic? Join a group in Hours.

Setup

In the 21st century, artificial intelligence (A.I.) technology has grown rapidly in regards to both its societal reach and its capabilities. While many scientists and politicians warn of the dangers of unchecked artificial intelligence, others believe that A.I. should not be regulated by the government.

Guidelines

Carefully read the following six sources, including the introductory information for each source. Write an essay that synthesizes material from at least three of the sources and develops your position on the extent to which artificial intelligence should be regulated.

- Document 1 (Bossman)

- Document 2 (Cartoon)

- Document 3 (Russell)

- Document 4 (Yang)

- Document 5 (Shead)

- Document 6 (Straub)

Document 1 (Bossman)

Source*: Bossmann, Julia. “Top 9 ethical issues in artificial intelligence.” weforum.org. World Economic Forum, 21 October 2016. Web. 1 March 2021.*

(The following is excerpted from an online article.)

Tech giants such as Alphabet, Amazon, Facebook, IBM and Microsoft – as well as individuals like Stephen Hawking and Elon Musk – believe that now is the right time to talk about the nearly boundless landscape of artificial intelligence. In many ways, this is just as much a new frontier for ethics and risk assessment as it is for emerging technology. So which issues and conversations keep AI experts up at night?

1. Unemployment. What happens after the end of jobs?

- The hierarchy of labour is concerned primarily with automation. As we’ve invented ways to automate jobs, we could create room for people to assume more complex roles, moving from the physical work that dominated the pre-industrial globe to the cognitive labour that characterizes strategic and administrative work in our globalized society…

2. Inequality. How do we distribute the wealth created by machines?

- Our economic system is based on compensation for contribution to the economy, often assessed using an hourly wage. The majority of companies are still dependent on hourly work when it comes to products and services. But by using artificial intelligence, a company can drastically cut down on relying on the human workforce, and this means that revenues will go to fewer people. Consequently, individuals who have ownership in AI-driven companies will make all the money...

3. Humanity. How do machines affect our behaviour and interaction?

- ...Even though not many of us are aware of this, we are already witnesses to how machines can trigger the reward centres in the human brain. Just look at click-bait headlines and video games. These headlines are often optimized with A/B testing, a rudimentary form of algorithmic optimization for content to capture our attention. This and other methods are used to make numerous video and mobile games become addictive. Tech addiction is the new frontier of human dependency...

4. Artificial stupidity. How can we guard against mistakes?

- Intelligence comes from learning, whether you’re human or machine. Systems usually have a training phase in which they "learn" to detect the right patterns and act according to their input. Once a system is fully trained, it can then go into test phase, where it is hit with more examples and we see how it performs.

- Obviously, the training phase cannot cover all possible examples that a system may deal with in the real world. These systems can be fooled in ways that humans wouldn't be. For example, random dot patterns can lead a machine to “see” things that aren’t there. If we rely on AI to bring us into a new world of labour, security and efficiency, we need to ensure that the machine performs as planned, and that people can’t overpower it to use it for their own ends.

5. Racist robots. How do we eliminate AI bias?

- Though artificial intelligence is capable of a speed and capacity of processing that’s far beyond that of humans, it cannot always be trusted to be fair and neutral. Google and its parent company Alphabet are one of the leaders when it comes to artificial intelligence, as seen in Google’s Photos service, where AI is used to identify people, objects and scenes. But it can go wrong, such as when a camera missed the mark on racial sensitivity, or when a software used to predict future criminals showed bias against black people.

- We shouldn’t forget that AI systems are created by humans, who can be biased and judgmental. Once again, if used right, or if used by those who strive for social progress, artificial intelligence can become a catalyst for positive change.

6. Security. How do we keep AI safe from adversaries?

- The more powerful a technology becomes, the more can it be used for nefarious reasons as well as good. This applies not only to robots produced to replace human soldiers, or autonomous weapons, but to AI systems that can cause damage if used maliciously. Because these fights won't be fought on the battleground only, cybersecurity will become even more important. After all, we’re dealing with a system that is faster and more capable than us by orders of magnitude.

7. Evil genies. How do we protect against unintended consequences?

- It’s not just adversaries we have to worry about. What if artificial intelligence itself turned against us? This doesn't mean by turning "evil" in the way a human might, or the way AI disasters are depicted in Hollywood movies. Rather, we can imagine an advanced AI system as a "genie in a bottle" that can fulfill wishes, but with terrible unforeseen consequences…

8. Singularity. How do we stay in control of a complex intelligent system?

- ...Human dominance is almost entirely due to our ingenuity and intelligence...

- This poses a serious question about artificial intelligence: will it, one day, have the same advantage over us? We can't rely on just "pulling the plug" either, because a sufficiently advanced machine may anticipate this move and defend itself. This is what some call the “singularity”: the point in time when human beings are no longer the most intelligent beings on earth.

9. Robot rights. How do we define the humane treatment of AI?

- ...Once we consider machines as entities that can perceive, feel and act, it's not a huge leap to ponder their legal status. Should they be treated like animals of comparable intelligence? Will we consider the suffering of "feeling" machines?...

Document 2 (Katz, Cartoon**)**

Source*: Katz, Farley. “Final human job replaced by robot.” The New Yorker. 29 October 2018.*

Document 3 (Russell)

Source*: Russell, Cameron. “A Case for not Regulating the Development of Artificial Intelligence.” towardsdatascience.com. Towards Data Science, 1 April 2019. Web. 1 March 2021.*

(The following is from an online article.)

What comes to mind when you hear the term artificial intelligence? The Terminator? HAL from Space Odyssey? The robotic lieutenant AUTO in Wall-E? Or the death of Elain Herzberg, who was killed by Uber’s self-driving car in Arizona?

Regardless of what immediately pops into your mind, popular culture has portrayed artificial intelligence as the antagonist in many future apocalyptic films, instilling a sense of fear and a cloud of mystery over the true capabilities of artificial intelligence–in many ways, this is reminiscent of the public’s fear of sharks, which was forever changed by the production of Jaws. With all the uneasiness surrounding artificial intelligence, regulators are starting to pay attention, albeit slowly, and beginning to address the concerns of the people.

While artificial intelligence used in the public domain should have certain safeguards to protect the interests of humanity, the development of new algorithms in the private sector should remain unrestricted.

There are four primary reasons cited in the argument for a lack of regulation:

1. Science

- Should we regulate science? Artificial intelligence is a field of science similar to chemistry, physics, and nanotechnology. Never before have we limited human ingenuity; for millennia people have been constantly innovating, eager to develop new technological breakthroughs and have thus improved our overall quality of life. Historically, humans are incessant explorers, from Sir Edmund Hillary to Julius Oppenheimer, and putting limitations on technological progression will inevitably be ineffective...

2. Uneducated Regulators

- Oftentimes, politicians follow the voices of their constituents, as they hope to be re-elected and do not want to tarnish their reputation. However, when the public as a whole is relatively uneducated on the implications of artificial intelligence, policy decisions will be made based on the views of ill-informed citizens. Plus, with the negative portrayal of artificial intelligence in media, many people will be left in the dark regarding the positives: cancer detection, eliminating tedious/monotonous tasks, freeing up more leisure time, and reducing human errors in the workforce. There are an abundance of positives that are hard to immediately recognize; restricting the usage and development of artificial intelligence will minimize these positive effects.

3. A Lack of Creativity

- Until the inception of artificial general intelligence, when an algorithm can complete a human task without explicit instruction and can learn from its mistakes, little creativity comes from computers. Sure, previously unrecognized correlations can be found in data, but novels, songs, and artworks cannot be created with the same artistic mastery and creativity as humans. While some people fear robot humanoids will take over the planet in the future, a computer’s absence of creativity ensures they will not surpass current human capabilities. Simply put, computers are not a threat to human life

4. Global Competition

- If the United States hinders the development of artificial intelligence through regulation, other countries will likely not follow suit, continuing to fund their research. And because future conflicts will be fought primarily over the computer grid, with unmanned vehicles collecting intel and executing missions, being prepared for this worst-case scenario is essential. For example, there are already speculations of the development of algorithms capable of distinguishing civilians from enemies on the battlefield. Whether the United States decides to utilize automated processes in warfare is up to the government’s discretion, but having such technological capability ensures there is a last resort in a situation of dire circumstances. It is better having the technology available than being left defenseless against more dominant opponents. And as the age-old saying goes, “hope for the best, prepare for the worst.”

Document 4 (Yang)

Source*: Yang, Andrew. “Department of Technology.” yang2020.com. Yang 2020, 2020. Web. 1 March 2021.*

(The following is from a political campaign website.)

Americans have seen the negative impact of technology on their lives. From automation that is displacing their jobs to smart phones that are causing unknown psychological issues for our children, Americans are worried about the future, and need to be able to trust the government to play a role in ensuring that we’re prosperous and innovative, but also safe. Technology can bring about new levels of prosperity, but it also holds the potential to disrupt our economies, ruin lives throughout several generations, and, if experts such as Stephen Hawking and Elon Musk are to be believed, destroy humanity.

Technology is advancing at a pace never before seen in human history, and even those developing it don’t fully understand how it works or what direction it’s taking. Recent advances in machine learning have shown that a computer, given certain directives, can learn tasks much faster than humans thought possible even a year ago.

The level of technological fluency that members of our government has shown has created justified fears in the minds of Americans that the government isn’t equipped to create a regulatory system that’s designed to protect them. We’re heading into this new world with a regulatory system that’s designed for technology that’s much less sophisticated than what we’re facing in the near future.

Technological innovation shouldn’t be stopped, but it should be monitored and analyzed to make sure we don’t move past a point of no return. This will require cooperation between the government and private industry to ensure that developing technologies can continue to improve our lives without destroying them. We need a federal government department, with a cabinet-level secretary, that is in charge of leading technological regulation in the 21st century.

Document 5 (Shead)

Source*: Shead, Sam. “Elon Musk says DeepMind is his ‘top concern’ when it comes to A.I.” cnbc.com. CNBC, 29 July 2020. Web. 1 March 2021.*

(The following is excerpted from an article from a popular news website.)

Elon Musk believes that London research lab DeepMind is a “top concern” when it comes to artificial intelligence.

DeepMind was acquired by Google in 2014 for a reported $600 million. The research lab, led by chief executive Demis Hassabis, is best-known for developing AI systems that can play games better than any human.

“Just the nature of the AI that they’re building is one that crushes all humans at all games,” Musk told The New York Times in an interview published on Saturday. “I mean, it’s basically the plotline in ‘War Games.’” ...

Musk has repeatedly warned that AI will soon become just as smart as humans and said that when it does we should all be scared because humanity’s very existence is at stake.

The tech billionaire, who profited from an early investment in DeepMind, told The New York Times that his experience of working with AI at Tesla means he is able to say with confidence “that we’re headed toward a situation where AI is vastly smarter than humans.” He said he believes the time frame is less than five years. “That doesn’t mean everything goes to hell in five years. It just means that things get unstable or weird,” he said.

Musk co-founded the OpenAI research lab in San Francisco in 2015, one year after Google acquired DeepMind. Set up with an initial $1 billion pledge that was later matched by Microsoft, OpenAI says its mission is to ensure AI benefits all of humanity. In February 2018, Musk left the OpenAI board but he continues to donate and advise the organization.

Musk has been sounding the alarm on AI for years and his views contrast with many AI researchers working in the field...

Document 6 (Straub)

Source*: Straub, Jeremy. “Elon Musk is wrong about regulating artificial intelligence.” marketwatch.com. MarketWatch, 7 January 2018. Web. 1 March 2021.*

(The following is excerpted from an online article.)

Some people are afraid that heavily armed artificially intelligent robots might take over the world, enslaving humanity — or perhaps exterminating us.

These people, including tech-industry billionaire Elon Musk and eminent physicist Stephen Hawking, say artificial intelligence technology needs to be regulated to manage the risks. But Microsoft founder Bill Gates and Facebook’s Mark Zuckerberg disagree, saying the technology is not nearly advanced enough for those worries to be realistic.

As someone who researches how AI works in robotic decision-making, drones and self-driving vehicles, I’ve seen how beneficial it can be. I’ve developed AI software that lets robots working in teams make individual decisions, as part of collective efforts to explore and solve problems.

Researchers are already subject to existing rules, regulations and laws designed to protect public safety. Imposing further limitations risks reducing the potential for innovation with AI systems.

While the term “artificial intelligence” may conjure fantastical images of humanlike robots, most people have encountered AI before. It helps us find similar products while shopping, offers movie and TV recommendations and helps us search for websites. It grades student writing, provides personalized tutoring and even recognizes objects carried through airport scanners.

In each case, AI makes things easier for humans. For example, the AI software I developed could be used to plan and execute a search of a field for a plant or animal as part of a science experiment. But even as the AI frees people from doing this work, it is still basing its actions on human decisions and goals about where to search and what to look for.

In areas like these and many others, AI has the potential to do far more good than harm — if used properly. But I don’t believe additional regulations are currently needed. There are already laws on the books of nations, states and towns governing civil and criminal liabilities for harmful actions. Our drones, for example, must obey FAA regulations, while the self-driving car AI must obey regular traffic laws to operate on public roadways.

Existing laws also cover what happens if a robot injures or kills a person, even if the injury is accidental and the robot’s programmer or operator isn’t criminally responsible. While lawmakers and regulators may need to refine responsibility for AI systems’ actions as technology advances, creating regulations beyond those that already exist could prohibit or slow the development of capabilities that would be overwhelmingly beneficial.

© 2024 Fiveable Inc. All rights reserved.